Navigation

The robot's Navigation subsystem is broken down into four key aspects: Mapping, Path Planning, Obstacle Avoidance, and Motor Control.

ROS

ROS manages the communication between the robot's nodes. Most notably, the camera node publishes RGB and depth frames from the Kinect sensor, which RTAB-Map uses for visual odometry and SLAM. RTAB-Map outputs a grid map of the environment, which the move_base package uses to plan and traverse the path the robot needs to reach its destination.
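To make the grid map concrete, here is a minimal sketch of how a map-frame position can be looked up in a grid stored the way ROS's `nav_msgs/OccupancyGrid` message stores it (row-major, with -1 for unknown and 0 to 100 for occupancy). The helper names, the tiny example grid, and the assumption that the map origin has no rotation are illustrative and not taken from the robot's code.

```python
import math


def world_to_cell(x, y, origin_x, origin_y, resolution):
    """Convert a map-frame position in metres to (col, row) grid indices.

    Assumes the grid origin is not rotated relative to the map frame.
    """
    col = int(math.floor((x - origin_x) / resolution))
    row = int(math.floor((y - origin_y) / resolution))
    return col, row


def cell_value(data, width, height, col, row):
    """Return the occupancy value of a cell, or None if it lies off the map."""
    if not (0 <= col < width and 0 <= row < height):
        return None
    return data[row * width + col]  # row-major storage


# Hypothetical 3 x 2 grid, 0.5 m per cell, origin at (-1.0, 0.0).
grid = [0, 0, 100,
        -1, 0, 0]
col, row = world_to_cell(0.2, 0.1, -1.0, 0.0, 0.5)
occupancy = cell_value(grid, 3, 2, col, row)
```

In this made-up grid the queried position falls in the occupied cell at the end of the first row, which a planner would treat as an obstacle.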

Mapping

The system uses RTAB-Map (Real-Time Appearance-Based Mapping), a SLAM (Simultaneous Localization and Mapping) technique. It runs under ROS with a Kinect camera to generate 3D point clouds of the hospital environment.

Path Planning

The robot uses the move_base ROS package to produce a global costmap from the map produced by rtabmap and a local costmap from LiDAR scans. A navigation goal can be sent to move_base through a server-client interface (actionlib). move_base then plans global (high-level) and local (low-level) paths to the goal and sends velocity commands to the robot to reach it.
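As a rough illustration of the actionlib interface, the sketch below sends a single goal pose to move_base. It assumes the default `move_base` action name and a `map` frame; the function names and the example coordinates are hypothetical, and the ROS imports sit inside `send_goal` so that the quaternion helper can be tried without a ROS installation.

```python
import math


def yaw_to_quaternion(yaw):
    """Quaternion (x, y, z, w) for a rotation of `yaw` radians about the z axis."""
    return (0.0, 0.0, math.sin(yaw / 2.0), math.cos(yaw / 2.0))


def send_goal(x, y, yaw, frame_id="map"):
    """Send one navigation goal to move_base and wait for the result.

    Requires a running ROS master, an initialised node, and move_base.
    """
    import rospy
    import actionlib
    from move_base_msgs.msg import MoveBaseAction, MoveBaseGoal

    client = actionlib.SimpleActionClient("move_base", MoveBaseAction)
    client.wait_for_server()

    goal = MoveBaseGoal()
    goal.target_pose.header.frame_id = frame_id
    goal.target_pose.header.stamp = rospy.Time.now()
    goal.target_pose.pose.position.x = x
    goal.target_pose.pose.position.y = y
    qx, qy, qz, qw = yaw_to_quaternion(yaw)
    goal.target_pose.pose.orientation.x = qx
    goal.target_pose.pose.orientation.y = qy
    goal.target_pose.pose.orientation.z = qz
    goal.target_pose.pose.orientation.w = qw

    client.send_goal(goal)
    client.wait_for_result()
    return client.get_state()


# Usage on the robot (example values only):
#   rospy.init_node("send_goal_example")
#   send_goal(1.0, 0.5, math.pi / 2)
```

The returned state is an `actionlib_msgs/GoalStatus` code, so a caller can compare it against `GoalStatus.SUCCEEDED` to check whether the robot arrived.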

Obstacle Avoidance

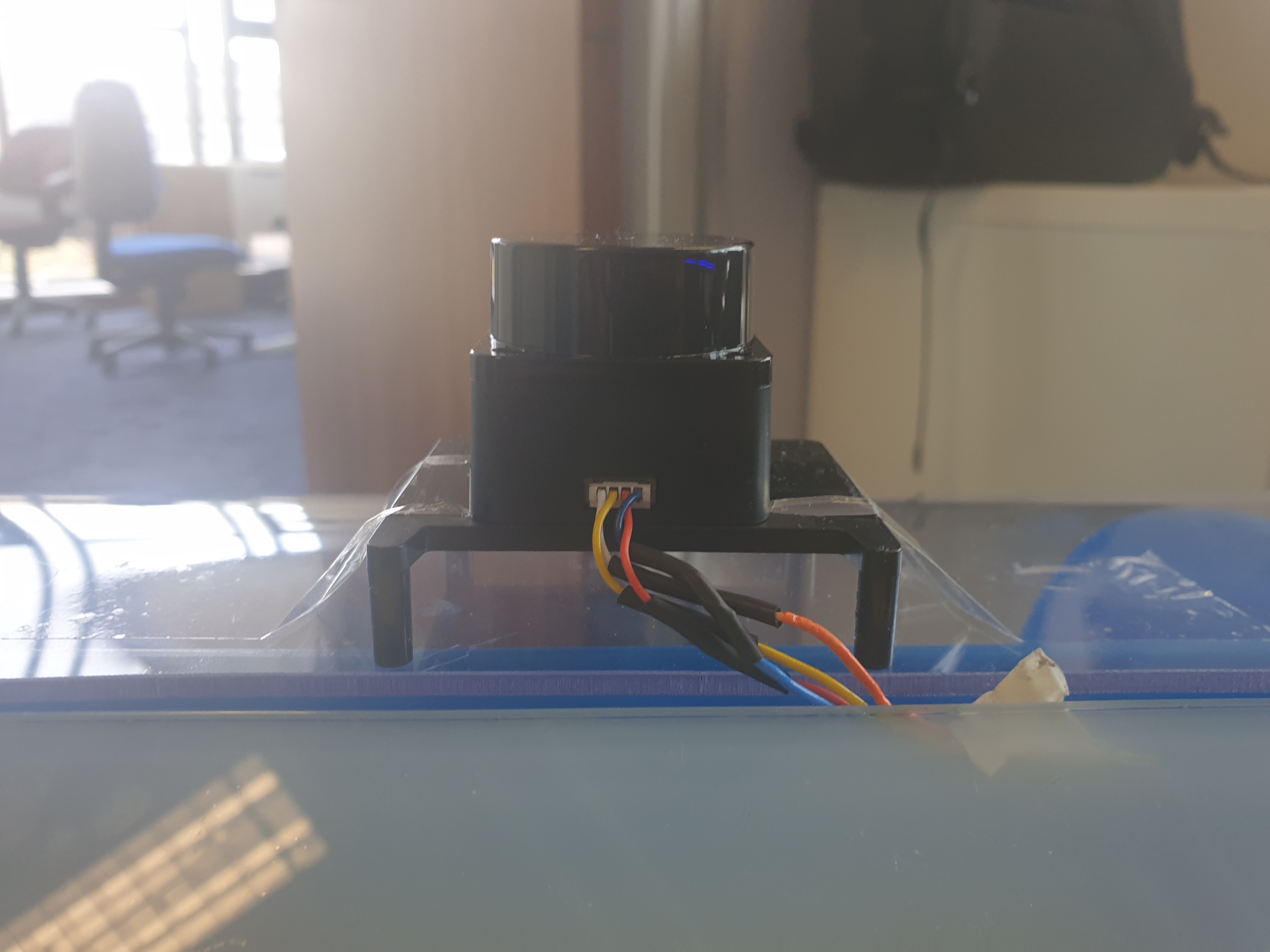

The LiDAR mounted on top of the robot integrates with the rtabmap package. It updates the global map whenever obstacles are detected, which allows for collision avoidance. It also aids odometry, improving the reliability of autonomous driving.
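The sketch below shows one way raw LiDAR readings can be turned into obstacle positions. It assumes the LiDAR driver publishes scans with the fields of ROS's `sensor_msgs/LaserScan` message (`ranges`, `angle_min`, `angle_increment`, `range_min`, `range_max`); the function names are hypothetical, and this is not the processing that rtabmap or move_base performs internally.

```python
import math


def scan_to_points(ranges, angle_min, angle_increment, range_min, range_max):
    """Convert polar scan readings to (x, y) points in the sensor frame.

    Readings that are infinite, NaN, or outside the valid range are skipped.
    """
    points = []
    for i, r in enumerate(ranges):
        if math.isinf(r) or math.isnan(r) or not (range_min <= r <= range_max):
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


def closest_obstacle(points):
    """Return the distance in metres to the nearest point, or None if empty."""
    if not points:
        return None
    return min(math.hypot(x, y) for x, y in points)


# Made-up scan: three beams, 90 degrees apart, with no return on the middle beam.
example_points = scan_to_points([1.0, float("inf"), 2.0], 0.0, math.pi / 2, 0.1, 10.0)
nearest = closest_obstacle(example_points)
```

Points like these, once transformed into the map frame, are the kind of data a costmap marks as occupied.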

Motor Control

The Maintenance Team can use keyboard control to drive the robot remotely around the hospital, allowing it to map the rooms. For autonomous drive, the Raspberry Pi publishes velocities to the 'cmd_vel' topic. The Arduino that controls the motors receives these velocities and drives the wheels.
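To illustrate what driving the wheels from a velocity command can involve, here is a minimal sketch of the standard differential-drive conversion. It assumes 'cmd_vel' carries the conventional `geometry_msgs/Twist` fields (`linear.x` in m/s and `angular.z` in rad/s) and that the robot has a differential-drive base; neither is stated above, and the function name and wheel separation are hypothetical. The real conversion runs on the Arduino, so this Python version only shows the arithmetic.

```python
def twist_to_wheel_speeds(linear_x, angular_z, wheel_separation):
    """Convert a body velocity command into left and right wheel speeds.

    linear_x: forward speed in m/s.
    angular_z: turn rate in rad/s (positive is counter-clockwise).
    wheel_separation: distance between the two drive wheels in metres.
    Returns (left, right) wheel linear speeds in m/s.
    """
    half_track = wheel_separation / 2.0
    left = linear_x - angular_z * half_track
    right = linear_x + angular_z * half_track
    return left, right


# Example values only: drive straight, then turn on the spot.
straight = twist_to_wheel_speeds(0.5, 0.0, 0.3)
spin = twist_to_wheel_speeds(0.0, 1.0, 0.4)
```

Driving straight gives equal wheel speeds, while a pure rotation gives equal and opposite ones. Dividing each result by the wheel radius would give the angular speed to command on each motor.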

For more information, see our development justifications for sensors, including testing and development of RTAB-Map, and Motor Development here.